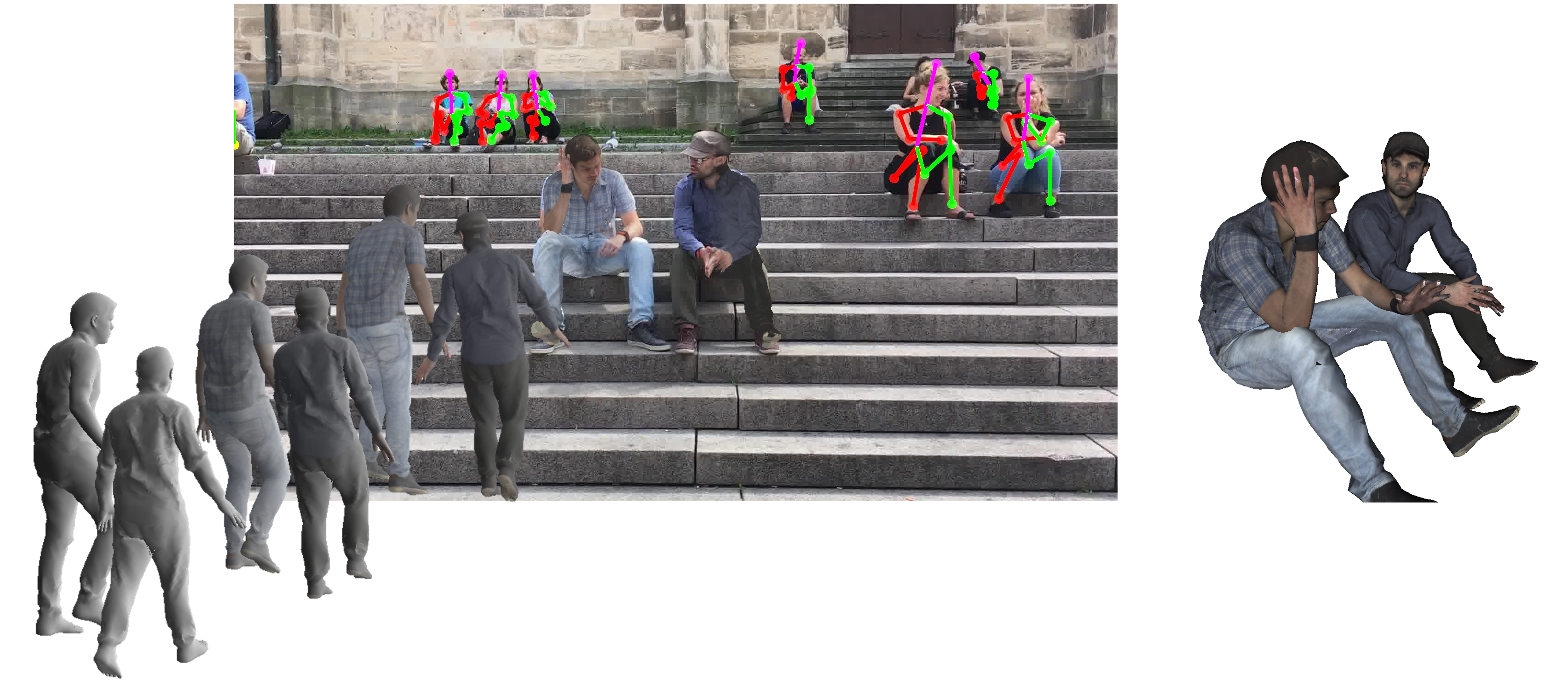

Figure 1. Multi-person pose estimation in the wild using Video Inertial Poser (VIP). VIP combines video obtained from a handheld smartphone camera with data coming from body-worn inertial measurement units (IMUs).


Accurately capturing human motion is a prerequisite for understanding human activity, modelling human-scene interactions, and synthesizing novel motions. Wearable tracking devices such as Inertial Measurement Units (IMUs) offer a lightweight yet robust solution to human motion capture.

IMU-based systems are not restricted to a limited capture volume, which makes them well suited to outdoor recording. However, commercial IMU systems are quite intrusive because of the large number of sensors attached to the body. To address this problem, we proposed Sparse Inertial Poser [1], a tracker that enables motion capture in the wild with only 6 sensors. This is achieved by exploiting the anthropometric constraints of a statistical body model and by jointly optimizing over all frames. In the follow-up work Deep Inertial Poser [2], we learned a temporal motion prior with a deep neural network instead of optimizing temporal dependencies. The resulting tracker can reconstruct human poses from 6 IMU sensors in real time.

IMUs typically suffer from heading drift. As a result, positional errors accumulate over time. Several works have introduced an external camera to obtain accurate position information and provide additional visual cues. In [3], we proposed a hybrid tracker that integrates IMU measurements with a video-based tracker. The problem is formulated as a system of linear equations and can be solved efficiently. In [4], we tackled a more difficult task: multi-person pose estimation in the wild (Figure 1). To overcome challenges such as cluttered backgrounds and occlusions, we solved a novel graph-based optimization problem to enforce intra-frame and inter-frame coherency. Human poses, camera parameters, and heading drift are then jointly optimized.
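
To make the effect of heading drift concrete, the following toy sketch dead-reckons a straight walk while the estimated heading rotates at a constant rate. It is not taken from any of the cited papers: the function name `dead_reckon`, the walking speed, and the drift rate of 1 degree per second are hypothetical values chosen only for illustration.

```python
import math


def dead_reckon(speed, heading_drift_rate, duration, dt=0.01):
    """Toy model: walk straight along +x while the heading estimate drifts.

    speed              -- walking speed in m/s (hypothetical value)
    heading_drift_rate -- drift of the heading estimate in rad/s (hypothetical)
    duration           -- length of the walk in seconds
    Returns the distance in metres between the true and the estimated
    end position.
    """
    steps = int(round(duration / dt))
    true_x = 0.0
    est_x = est_y = 0.0
    heading_error = 0.0
    for _ in range(steps):
        # Ground truth: constant velocity along the x axis.
        true_x += speed * dt
        # Estimate: same speed, but integrated along the drifting heading.
        est_x += speed * math.cos(heading_error) * dt
        est_y += speed * math.sin(heading_error) * dt
        heading_error += heading_drift_rate * dt
    return math.hypot(est_x - true_x, est_y)


if __name__ == "__main__":
    drift = math.radians(1.0)  # assumed drift of 1 degree per second
    for seconds in (10, 30, 60):
        error = dead_reckon(speed=1.0, heading_drift_rate=drift, duration=seconds)
        print(f"{seconds:3d} s walk: position error {error:.2f} m")
```

In this simplified setting the position error keeps growing with the length of the recording, which is why an additional drift-free cue such as a camera is useful.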

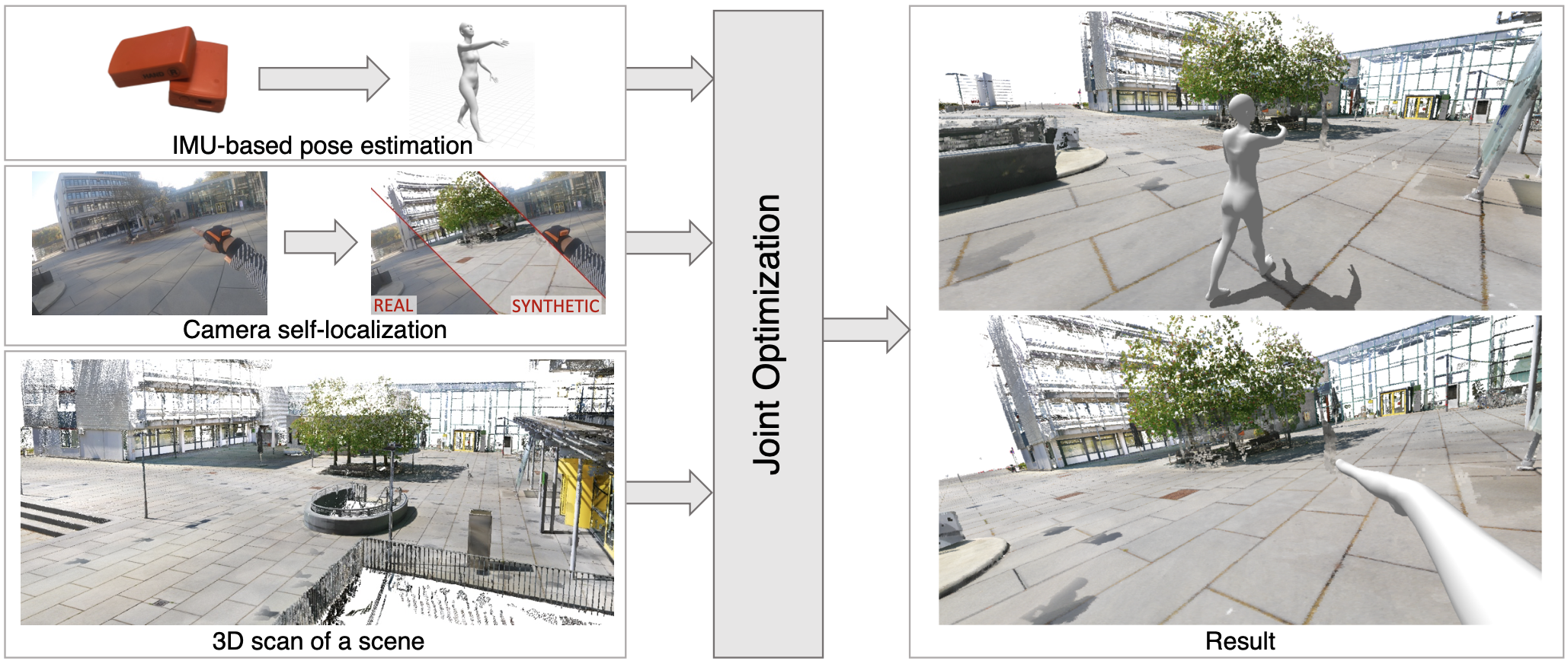

Figure 2. HPS jointly estimates the full 3D human pose and location of a subject within large 3D scenes using IMUs and a head-mounted camera.


Using an external camera severely limits the capture volume of a motion capture session. Recently, we explored using a head-mounted camera for human motion capture. In SelfPose [5], we proposed a state-of-the-art method to estimate egocentric body poses from a head-mounted fisheye camera. We also combined a head-mounted camera with IMUs in our more recent work, HPS [6], for simultaneous human tracking and localization in a large pre-scanned scene, as shown in Figure 2. This opens up many possibilities for VR/AR applications where people interact with scenes.

References

1. Von Marcard, Timo, et al. "Sparse inertial poser: Automatic 3D human pose estimation from sparse IMUs." Computer Graphics Forum. Vol. 36. No. 2. 2017.
2. Huang, Yinghao, et al. "Deep inertial poser: Learning to reconstruct human pose from sparse inertial measurements in real time." ACM Transactions on Graphics (TOG) 37.6 (2018): 1-15.
3. Pons-Moll, Gerard, et al. "Multisensor-fusion for 3D full-body human motion capture." 2010 IEEE Computer Society Conference on Computer Vision and Pattern Recognition. IEEE, 2010.
4. Von Marcard, Timo, et al. "Recovering accurate 3D human pose in the wild using IMUs and a moving camera." Proceedings of the European Conference on Computer Vision (ECCV). 2018.
5. Tome, Denis, et al. "SelfPose: 3D Egocentric Pose Estimation from a Headset Mounted Camera." IEEE Transactions on Pattern Analysis and Machine Intelligence, 2020.
6. Guzov, Vladimir, et al. "Human POSEitioning System (HPS): 3D Human Pose Estimation and Self-localization in Large Scenes from Body-Mounted Sensors." Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. 2021.