Human POSEitioning System (HPS): 3D Human Pose Estimation and Self-localization in Large Scenes from Body-Mounted Sensors

Vladimir Guzov* 1,2, Aymen Mir* 1,2, Torsten Sattler 3, Gerard Pons-Moll 1,2

*Joint first authors with equal contribution

1 University of Tübingen, Germany

2 Max Planck Institute for Informatics, Saarland Informatics Campus, Germany

3 CIIRC, Czech Technical University in Prague, Czech Republic

CVPR 2021 Virtual

Abstract

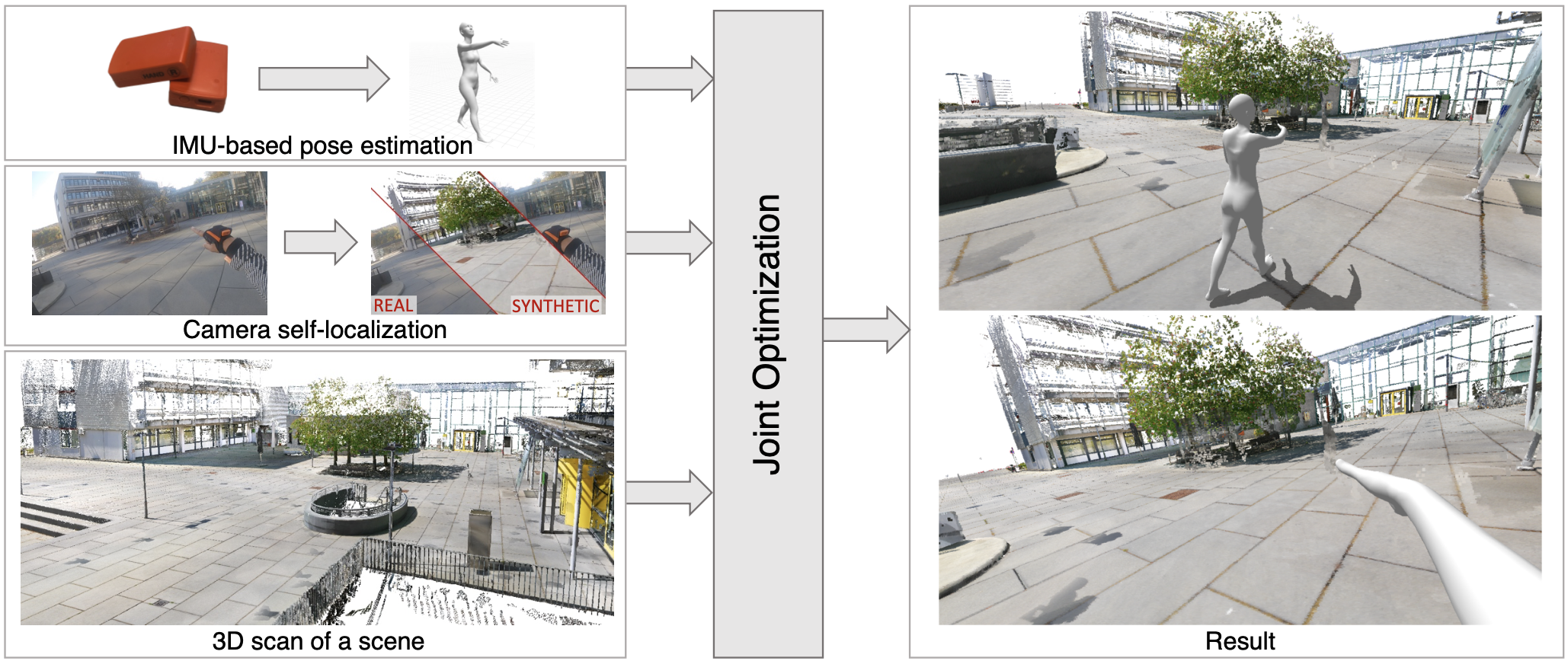

We introduce the Human POSEitioning System (HPS), a method to recover the full 3D pose of a human registered with a 3D scan of the surrounding environment using wearable sensors. Using IMUs attached to the body limbs and a head-mounted camera looking outwards, HPS fuses camera-based self-localization with IMU-based human body tracking. The former provides drift-free but noisy position and orientation estimates, while the latter is accurate in the short term but subject to drift over longer periods of time. We show that our optimization-based integration exploits the benefits of the two, resulting in drift-free pose estimates. Furthermore, we integrate 3D scene constraints into our optimization, such as foot contact with the ground, resulting in physically plausible motion. HPS complements more common third-person-based 3D pose estimation methods. It allows capturing larger recording volumes and longer periods of motion, and could be used for VR/AR applications where humans interact with the scene without requiring direct line of sight with an external camera, or to train agents that navigate and interact with the environment based on first-person visual input, like real humans. With HPS, we recorded a dataset of 7 subjects interacting with large 3D scenes (300-1000 sq. m), comprising more than 3 hours of diverse motion.
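The complementary nature of the two signals can be illustrated with a toy example. The sketch below is not the HPS optimization (which estimates full 3D body pose and includes scene constraints); it is a minimal 1D least-squares fusion, assuming a noisy but unbiased absolute position signal standing in for camera localization and a precise but biased displacement signal standing in for IMU tracking. The function names, noise levels, and weights `w_cam` and `w_imu` are all hypothetical choices made for illustration.

```python
import numpy as np


def fuse_1d(cam_pos, imu_delta, w_cam=1.0, w_imu=100.0):
    """Toy fusion: minimize
    w_cam * sum_t (x_t - cam_pos[t])^2 + w_imu * sum_t (x_{t+1} - x_t - imu_delta[t])^2
    as one linear least-squares problem."""
    cam_pos = np.asarray(cam_pos, dtype=float)
    imu_delta = np.asarray(imu_delta, dtype=float)
    T = len(cam_pos)

    # Absolute term: each x_t should stay close to the camera estimate.
    A_cam = np.sqrt(w_cam) * np.eye(T)
    b_cam = np.sqrt(w_cam) * cam_pos

    # Relative term: consecutive differences should match IMU displacements.
    D = np.diff(np.eye(T), axis=0)  # (T-1, T) forward-difference operator
    A_imu = np.sqrt(w_imu) * D
    b_imu = np.sqrt(w_imu) * imu_delta

    A = np.vstack([A_cam, A_imu])
    b = np.concatenate([b_cam, b_imu])
    x, *_ = np.linalg.lstsq(A, b, rcond=None)
    return x


def simulate(T=200, seed=0):
    """Synthetic data (assumed noise model, not taken from the paper)."""
    rng = np.random.default_rng(seed)
    truth = np.linspace(0.0, 10.0, T)
    # Camera: no drift, but large per-frame noise.
    cam = truth + rng.normal(0.0, 0.5, T)
    # IMU: small noise, but a constant bias that accumulates when integrated.
    imu_delta = np.diff(truth) + 0.01 + rng.normal(0.0, 0.001, T - 1)
    return truth, cam, imu_delta


def rmse(a, b):
    return float(np.sqrt(np.mean((np.asarray(a) - np.asarray(b)) ** 2)))


truth, cam, imu_delta = simulate()
# IMU-only trajectory: integrate displacements from the known start position.
imu_only = truth[0] + np.concatenate([[0.0], np.cumsum(imu_delta)])
fused = fuse_1d(cam, imu_delta)

print("camera-only RMSE:", rmse(cam, truth))
print("IMU-only RMSE:   ", rmse(imu_only, truth))
print("fused RMSE:      ", rmse(fused, truth))
```

In this toy setting the relative term smooths the camera noise, while the absolute term keeps the integrated bias from accumulating, which mirrors the intuition stated in the abstract.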

Citation

@inproceedings{HPS,
    title = {Human POSEitioning System (HPS): 3D Human Pose Estimation and Self-localization in Large Scenes from Body-Mounted Sensors},
    author = {Guzov, Vladimir and Mir, Aymen and Sattler, Torsten and Pons-Moll, Gerard},
    booktitle = {{IEEE} Conference on Computer Vision and Pattern Recognition (CVPR)},
    month = {jun},
    organization = {{IEEE}},
    year = {2021},
}

Acknowledgments

We thank Bharat Bhatnagar, Verica Lazova, Anna Kukleva and Garvita Tiwari for their feedback. This work is partly funded by the DFG - 409792180 (Emmy Noether Programme, project: Real Virtual Humans), the EU Horizon 2020 project RICAIP (grant agreement No. 857306), and the European Regional Development Fund under project IMPACT (No. CZ.02.1.01/0.0/0.0/15_003/0000468). The project was made possible by funding from the Carl Zeiss Foundation.