COUCH: Towards Controllable Human-Chair Interactions

Xiaohan Zhang, Bharat Lal Bhatnagar, Sebastian Starke, Vladimir Guzov, Gerard Pons-Moll

1 University of Tübingen, Germany; 2 Max Planck Institute for Informatics, Saarland Informatics Campus, Germany; 3 Electronic Arts; 4 University of Edinburgh, United Kingdom

Overview

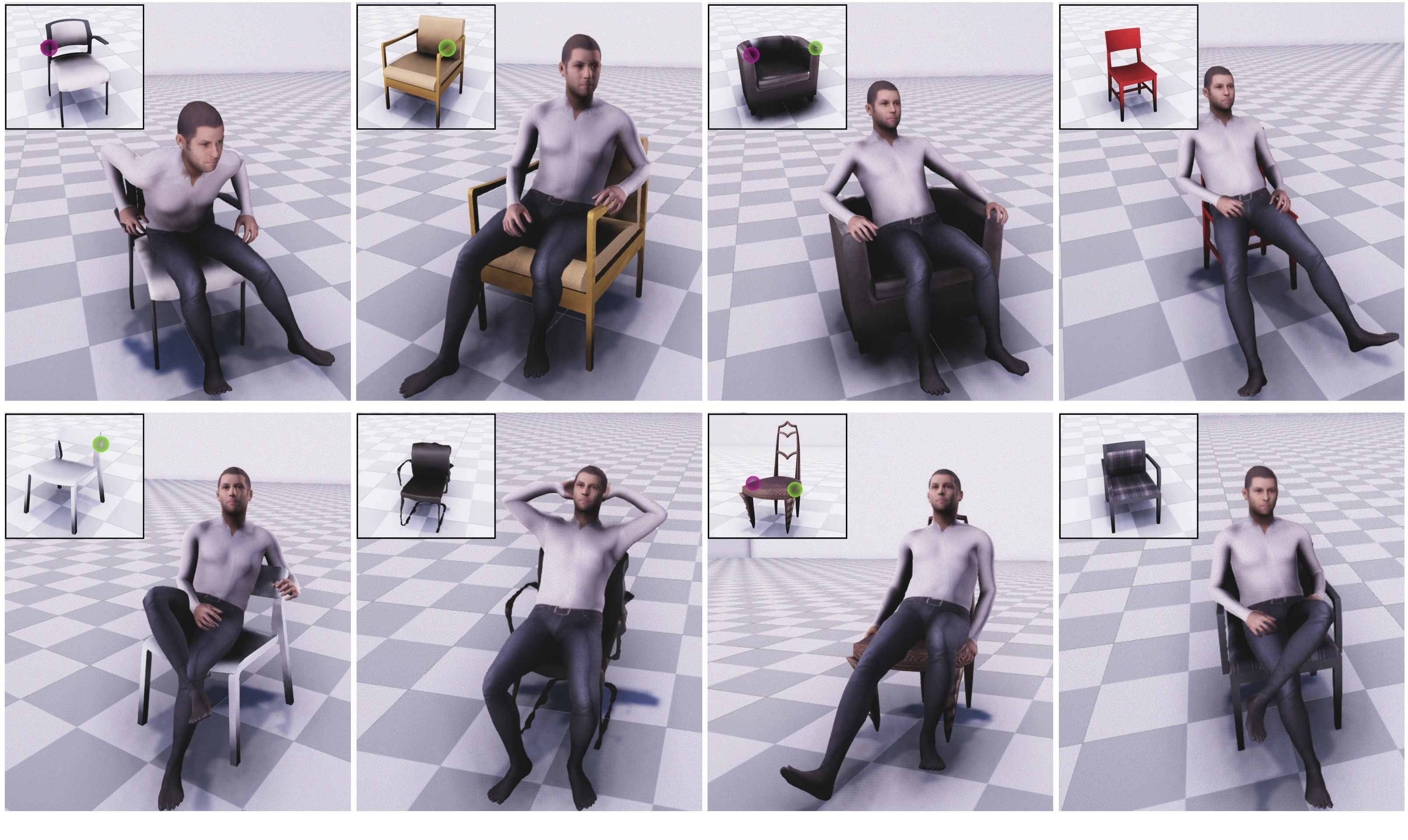

COUCH - A dataset and model that synthesize controllable, contact-based human-chair interactions.

We propose the first method that enables control over synthesized scene interaction: the user can either specify or sample hand contacts on the chair surface to achieve differently styled interactions. Our model generalizes to any starting location and to different contacts.

Abstract

Humans interact with an object in many different ways by making contact at different locations, creating a highly complex motion space that can be difficult to learn, particularly when synthesizing such human interactions in a controllable manner. Existing works on synthesizing human scene interaction focus on the high-level control of action but do not consider the fine-grained control of motion. In this work, we study the problem of synthesizing scene interactions conditioned on different contact positions on the object. As a testbed to investigate this new problem, we focus on human-chair interaction as one of the most common actions which exhibit large variability in terms of contacts. We propose a novel synthesis framework COUCH that plans ahead the motion by predicting contact-aware control signals of the hands, which are then used to synthesize contact-conditioned interactions. Furthermore, we contribute a large human-chair interaction dataset with clean annotations, the COUCH Dataset. Our method shows significant quantitative and qualitative improvements over existing methods for human-object interactions. More importantly, our method enables control of the motion through user-specified or automatically predicted contacts.

COUCH Dataset

Learning contact-based control calls for a rich dataset with diverse styles of interaction and accurately labelled contacts, which currently does not exist. We developed a robust method for capturing the human and the object that integrates data from 4 Kinects and inertial sensors worn on the subject's body. After processing, we build a dataset covering a diverse range of ways in which humans contact and interact with chairs.

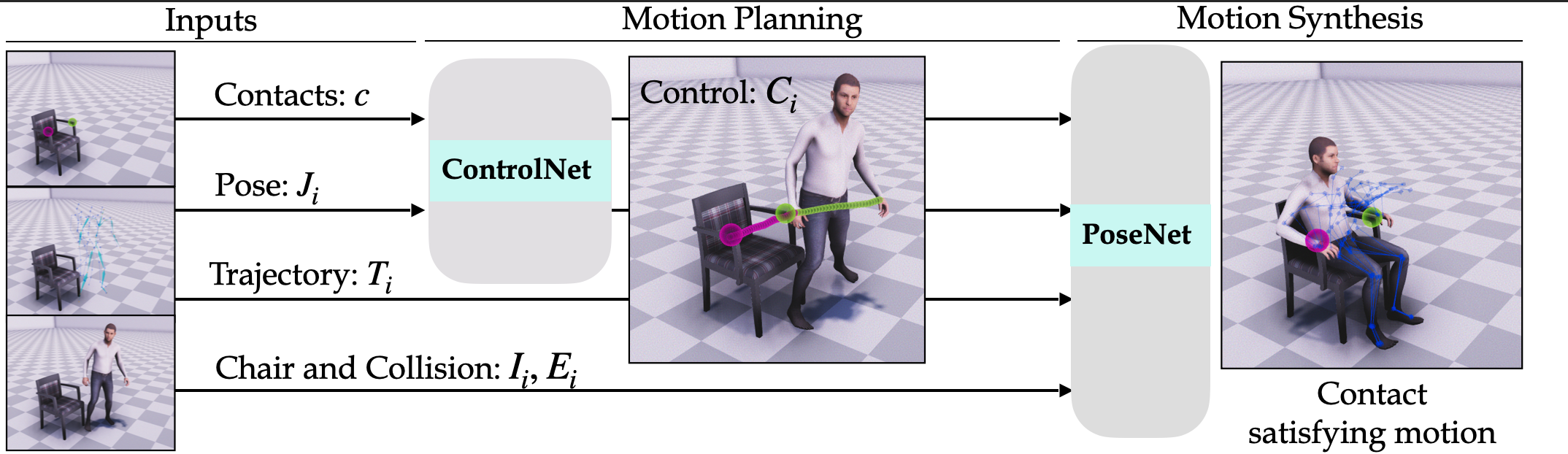

Key Insight

Given contact constraints and the current pose, our key insight is to decompose the sitting synthesis into motion planning and motion prediction. The ControlNet performs motion planning in the form of future hand trajectories. Our PoseNet uses the predicted control signals to generate contact-satisfying motion.
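The plan-then-predict decomposition can be illustrated with a toy loop. This is only a sketch under strong assumptions: `plan_hand_trajectory` and `predict_next_pose` are hypothetical stand-ins (simple linear interpolation and a hand-only pose update), not the learned ControlNet and PoseNet, and the pose is reduced to a single hand position.

```python
from typing import Dict, List, Tuple

Vec3 = Tuple[float, float, float]


def lerp(a: Vec3, b: Vec3, t: float) -> Vec3:
    """Linear interpolation between two 3D points."""
    return tuple(x + (y - x) * t for x, y in zip(a, b))


def plan_hand_trajectory(hand: Vec3, contact: Vec3, horizon: int) -> List[Vec3]:
    """Hypothetical stand-in for the planning stage.

    Returns `horizon` future hand positions that end at the contact.
    A learned planner would predict these instead of interpolating.
    """
    return [lerp(hand, contact, k / horizon) for k in range(1, horizon + 1)]


def predict_next_pose(pose: Dict[str, Vec3], control: List[Vec3]) -> Dict[str, Vec3]:
    """Hypothetical stand-in for the prediction stage.

    Advances the pose by one frame, following the first planned waypoint.
    A learned model would predict the full body pose from the control signal.
    """
    next_pose = dict(pose)
    next_pose["right_hand"] = control[0]
    return next_pose


def synthesize(pose: Dict[str, Vec3], contact: Vec3, steps: int,
               horizon: int = 5) -> List[Dict[str, Vec3]]:
    """Alternate planning and prediction for `steps` frames."""
    frames = []
    for step in range(steps):
        # Shrink the planning horizon near the end so the last frame reaches the contact.
        remaining = min(horizon, steps - step)
        control = plan_hand_trajectory(pose["right_hand"], contact, remaining)
        pose = predict_next_pose(pose, control)
        frames.append(pose)
    return frames


# Toy usage: the hand starts above the chair and is driven to an armrest contact.
frames = synthesize({"right_hand": (0.0, 1.0, 0.0)}, (0.5, 0.6, 0.2), steps=10)
```

Re-planning at every frame keeps the control signal consistent with the current pose, which is the property the decomposition relies on; the interpolation itself is purely illustrative.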

Demos

Generalization to Different Starting Points. COUCH can generalize to different starting points. Given the same chair model and the same contact positions, COUCH can generate contact-satisfying motion regardless of the starting state of the virtual human.

Sampling mode with predicted contacts. COUCH allows the user to generate controllable interaction with sampled contacts. Our novel neural network ContactNet enables the user to sample a diverse range of contact points on the chair to control the motion synthesis. In sampling mode, starting from the same pose initialization, the synthesis is conditioned on different sampled contacts on the chair surface to produce differently styled, contact-based interactions.
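To make the idea of sampling contacts on a chair surface concrete, the sketch below draws points uniformly from a triangle mesh using area-weighted triangle selection and barycentric coordinates. This is a generic geometric sampler used as a stand-in, not ContactNet, which is a learned model; the `armrest` mesh and all function names are hypothetical.

```python
import math
import random
from typing import List, Tuple

Vec3 = Tuple[float, float, float]
Triangle = Tuple[Vec3, Vec3, Vec3]


def triangle_area(tri: Triangle) -> float:
    """Area of a 3D triangle: half the norm of the edge cross product."""
    a, b, c = tri
    u = [b[i] - a[i] for i in range(3)]
    v = [c[i] - a[i] for i in range(3)]
    cross = (u[1] * v[2] - u[2] * v[1],
             u[2] * v[0] - u[0] * v[2],
             u[0] * v[1] - u[1] * v[0])
    return 0.5 * math.sqrt(sum(x * x for x in cross))


def sample_contact(triangles: List[Triangle], rng: random.Random) -> Vec3:
    """Sample one point uniformly over the surface of the given triangles."""
    # Pick a triangle with probability proportional to its area.
    weights = [triangle_area(t) for t in triangles]
    a, b, c = rng.choices(triangles, weights=weights, k=1)[0]
    # The square-root trick gives uniformly distributed barycentric weights.
    r1, r2 = rng.random(), rng.random()
    s = math.sqrt(r1)
    w_a, w_b, w_c = 1.0 - s, s * (1.0 - r2), s * r2
    return tuple(w_a * a[i] + w_b * b[i] + w_c * c[i] for i in range(3))


# Hypothetical armrest: a 0.40 m x 0.05 m rectangle at height 0.65 m, split in two triangles.
armrest: List[Triangle] = [
    ((0.0, 0.0, 0.65), (0.4, 0.0, 0.65), (0.4, 0.05, 0.65)),
    ((0.0, 0.0, 0.65), (0.4, 0.05, 0.65), (0.0, 0.05, 0.65)),
]

rng = random.Random(0)  # fixed seed so the sampled contacts are reproducible
contacts = [sample_contact(armrest, rng) for _ in range(5)]
```

Each sampled point could then serve as a contact condition for the same initial pose, which mirrors how different sampled contacts lead to different interactions in sampling mode.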

Full Video

Download

For further information about the COUCH dataset and for download links, please click here.

Citation

@inproceedings{zhang2022couch,
    title = {COUCH: Towards Controllable Human-Chair Interactions},
    author = {Zhang, Xiaohan and Bhatnagar, Bharat Lal and Starke, Sebastian and Guzov, Vladimir and Pons-Moll, Gerard},
    booktitle = {European Conference on Computer Vision ({ECCV})},
    month = {October},
    organization = {{Springer}},
    year = {2022}
}

Acknowledgments

This work is funded by the Deutsche Forschungsgemeinschaft (DFG, German Research Foundation) - 409792180 (Emmy Noether Programme, project: Real Virtual Humans), and German Federal Ministry of Education and Research (BMBF): Tübingen AI Center, FKZ: 01IS18039A. Gerard Pons-Moll is a member of the Machine Learning Cluster of Excellence, EXC number 2064/1 – Project number 390727645. The project was made possible by funding from the Carl Zeiss Foundation.