Description

The number of research papers on 3D pose estimation, particularly in uncontrolled settings, has increased dramatically in recent years. Most papers demonstrate performance qualitatively, showing images and the corresponding 3D body model or stick figure side by side. Quantitative evaluation is limited to indoor datasets such as H3.6M or HumanEva, or to outdoor datasets with a limited recording volume such as MuPoTS-3D. This changed with 3DPW, which is the only dataset with accurate reference 3D poses in natural scenes (e.g., people shopping in the city, having coffee, or doing sports, recorded with a moving hand-held camera). Although researchers have started using this dataset, the evaluation, protocols, and sequences differ from paper to paper, which makes it difficult to compare methods. The purpose of this workshop and challenge is to standardize protocols and metrics so that researchers can compare their methods consistently in future publications, and ultimately to advance the state of the art in 3D human pose estimation in the wild. The tentative program consists of invited talks, talks on the three top-performing methods of the 3DPW Challenge, and a poster session for workshop papers and invited relevant papers from the main conference.

Media

Our challenge was featured in the June edition of Computer Vision News.

Challenge

For information about tracks, evaluation, and metrics, see 3DPW Challenge.

Paper submission is not mandatory (but encouraged) for participation in the challenge.

The authors of the three top-performing methods (the two top-performing methods in joint position accuracy and the top-performing method in part orientation accuracy) will be invited to give a talk at the workshop.
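As a rough illustration of what a joint position accuracy measure can look like, the sketch below computes a mean per-joint position error (MPJPE), a metric commonly used in 3D pose estimation. This is an assumption made for illustration only: the official metrics, joint sets, and any alignment steps are those defined on the 3DPW Challenge page, and the function name `mpjpe` is hypothetical.

```python
import math


def mpjpe(pred, gt):
    """Mean per-joint position error between two 3D poses.

    pred, gt: sequences of (x, y, z) joint positions, given in the same
    units and the same joint order (both are assumptions of this sketch).
    Returns the mean Euclidean distance over all joints.
    """
    if len(pred) != len(gt) or len(pred) == 0:
        raise ValueError("poses must be non-empty and have the same number of joints")
    # Euclidean distance per joint, averaged over joints.
    return sum(math.dist(p, g) for p, g in zip(pred, gt)) / len(pred)


# Toy two-joint pose: the first joint matches, the second is off by 3 units.
predicted = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)]
reference = [(0.0, 0.0, 0.0), (1.0, 0.0, 3.0)]
print(mpjpe(predicted, reference))  # 1.5
```

A lower value means the predicted joints lie closer to the reference joints. Real evaluations usually average this error over all frames and people, which this sketch does not do.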

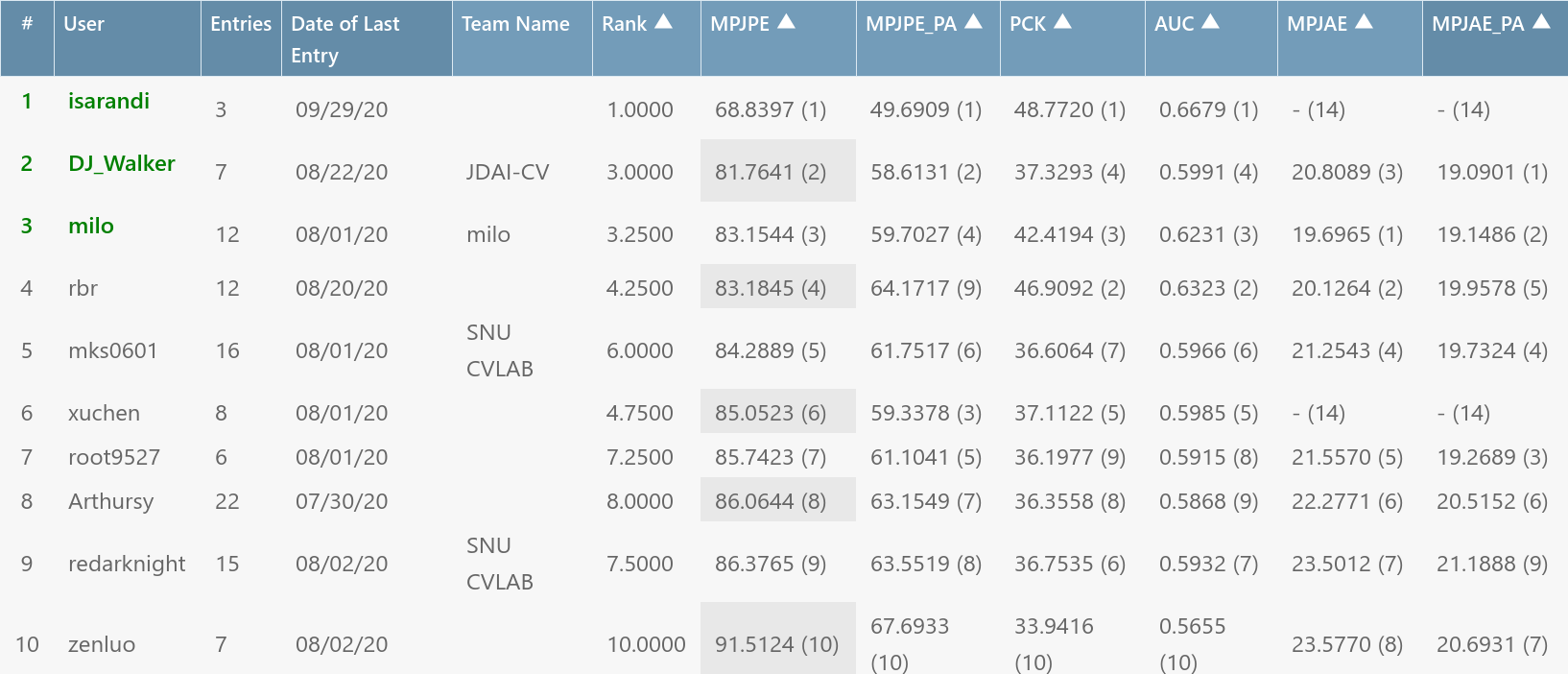

3DPW Benchmark

Our challenge has established a benchmark for 3D pose estimation. To submit your results, please go to the 3DPW Challenge.

Dataset

3DPW was collected using IMUs and hand-held cameras. The hand-held camera is used to capture photographs of the volunteers wearing IMUs as they perform daily activities. The IMUs are used to compute the 3D pose of the volunteers. Each 3D pose is then assigned to detected 2D poses in the image. The method is described in more detail in the ECCV'18 paper Recovering Accurate 3D Human Pose in The Wild Using IMUs and a Moving Camera. The dataset can be downloaded from our website.

Below, you can find the video of the ECCV'18 paper associated with the 3DPW dataset:

Call for Papers

We especially encourage papers on 3D human pose estimation that also participate in the challenge or evaluate on 3DPW using the provided protocols. We also invite papers in the following areas:

- 3D human pose and shape estimation

- 3D multi-person pose estimation

- 3D human texture completion and clothing reconstruction

- 3D human motion synthesis, prediction and analysis

Paper Submission

| Event | Date |
|---|---|
| Submission Deadline | August 1 |
| Challenge Deadline | August 1 |
| Reviews Due | August 7 |
| Notification of Acceptance | August 10 |
| Workshop | August 23 |
| Camera Ready Submission | September 10 |

Important Information:

- All deadlines are 5 PM Pacific time.

- Paper submissions should follow the same guidelines as ECCV: 14 pages plus references.

- Submissions can be uploaded to the CMT.

- If you do not have one already, create an account for cmt3 and log in.

- In case you are not directed to 3DPW submission, type 3DPW in the search box to find it.

- Accepted papers will be published in the proceedings of ECCV workshops.

Invited Speakers

Jitendra Malik

Gul Varol

Dushyant Mehta

Georgios Pavlakos

Tony Tung

Program [Central European Summer Time (UTC+2)]

| Time | Title | Speaker |
|---|---|---|
| 15:00 | Welcome and introduction | |
| 15:10 | The Next Generation of Virtual Humans | Tony Tung |
| 15:50 | Challenge Runner Up Talk | Yu Sun |
| 16:05 | On Performance Evaluation and Limitations of Monocular RGB based Pose Estimation | Dushyant Mehta |
| 16:45 | Learning to Reconstruct 3D Humans | Georgios Pavlakos |
| 17:25 | Challenge Winner Talk | Istvan Sarandi |
| 17:40 | Invited Talk | Jitendra Malik |
| 18:10 | 3D Human Motion in Action | Gul Varol |
| 18:50 | Closing Remarks | |

Organizers

Special Thanks

We would like to thank Timo von Marcard, Ikhsanul Habibie, and Mohamed Omran for useful discussions on evaluation metrics and protocols for 3D Human Pose Estimation.

We also thank Meysam Madadi and Sergio Escalera for their help and support in setting up the challenge on CodaLab.