Combining Implicit Function Learning and Parametric Models for 3D Human Reconstruction

IP-Net pre-trained models and code

Bharat Lal Bhatnagar, Cristian Sminchisescu, Christian Theobalt and Gerard Pons-Moll

Max Planck Institute for Informatics, Saarland Informatics Campus, Germany

ECCV 2020 (Oral)

Abstract

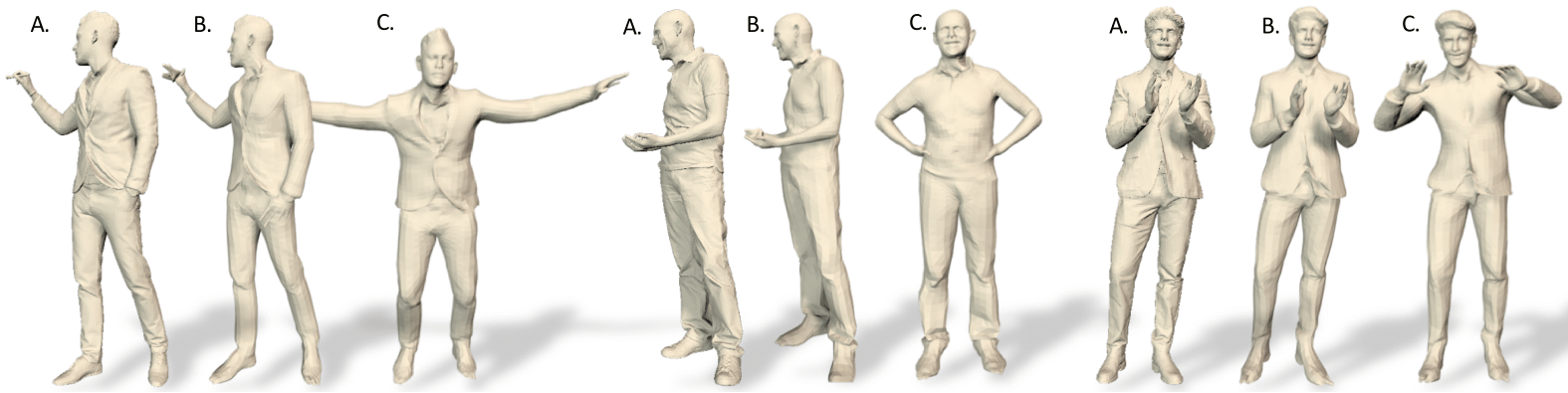

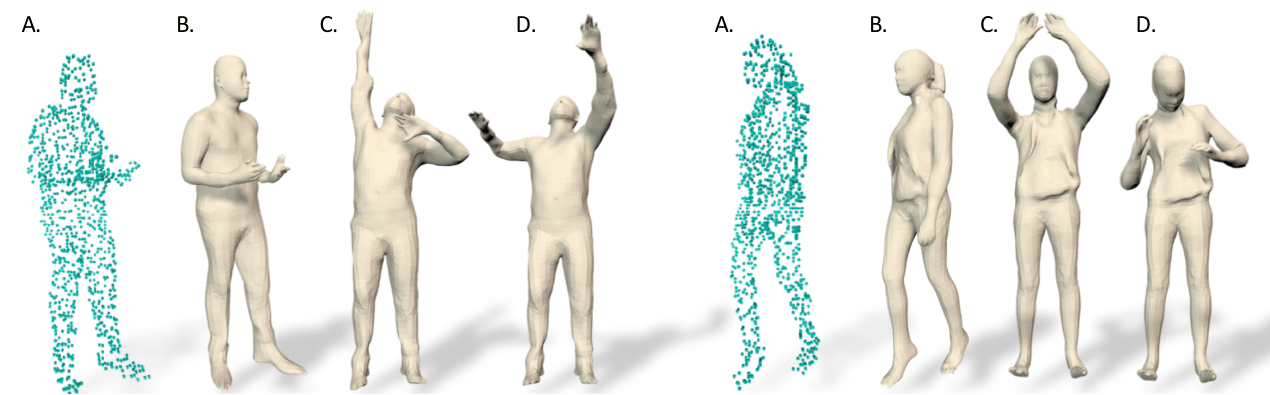

Deep-learned implicit functions are powerful tools for reconstructing 3D surfaces, but on their own they produce only static surfaces that are not controllable: for example, the resulting model cannot be edited by changing its pose or shape parameters. Such control is essential for both computer graphics and computer vision. In this work we present an approach that combines detail-rich implicit functions with parametric modelling in order to reconstruct 3D models of people that remain controllable even in the presence of clothing. Given a sparse 3D point cloud of a dressed person, we use the Implicit Part Network (IP-Net) to jointly predict the outer 3D surface of the dressed person, the inner body surface, and the semantic correspondences to a parametric body model (SMPL). We subsequently use these correspondences to fit the body model to our inner surface and then non-rigidly deform it (under a parametric body + displacement model) to the outer surface in order to capture garment, face and hair details. In quantitative and qualitative experiments with both full-body data and hand scans (e.g. the MANO dataset), we show that the proposed methodology generalizes and is effective even given incomplete point clouds from single-view depth images.
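To illustrate why predicted part correspondences help when fitting the body model, here is a minimal sketch of one plausible correspondence-aware data term. It assumes the correspondences are given as a part label per predicted inner-surface point, and that every body-model vertex also carries a part label; each point is then matched only to the nearest vertex of the same part. The function `part_aware_distance` and its arguments are hypothetical and this is not the authors' exact fitting objective, which is not described on this page.

```python
import numpy as np

def part_aware_distance(points, point_parts, verts, vert_parts):
    """Hypothetical data term: mean squared distance from each predicted
    inner-surface point to the nearest model vertex with the SAME part label.
    Assumes per-point and per-vertex part labels are available."""
    points = np.asarray(points, dtype=float)
    verts = np.asarray(verts, dtype=float)
    point_parts = np.asarray(point_parts)
    vert_parts = np.asarray(vert_parts)

    total = 0.0
    for part in np.unique(point_parts):
        pts = points[point_parts == part]      # predicted points of this part
        cand = verts[vert_parts == part]       # model vertices of this part
        if len(cand) == 0:
            raise ValueError(f"no model vertices labelled with part {part}")
        # Pairwise squared distances (num_pts x num_cand) via broadcasting.
        d2 = ((pts[:, None, :] - cand[None, :, :]) ** 2).sum(axis=-1)
        total += d2.min(axis=1).sum()          # nearest same-part vertex
    return total / len(points)

# Two points, two parts: correct labels give zero cost; swapped labels do not,
# even though the geometry is identical.
pts = np.array([[0.0, 0.0, 0.0], [1.0, 0.0, 0.0]])
print(part_aware_distance(pts, [0, 1], pts, [0, 1]))
print(part_aware_distance(pts, [0, 1], pts, [1, 0]))
```

A plain nearest-neighbour distance would score both cases as zero; restricting matches to the same part is what lets the labels rule out, for instance, fitting a left arm to a right arm.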

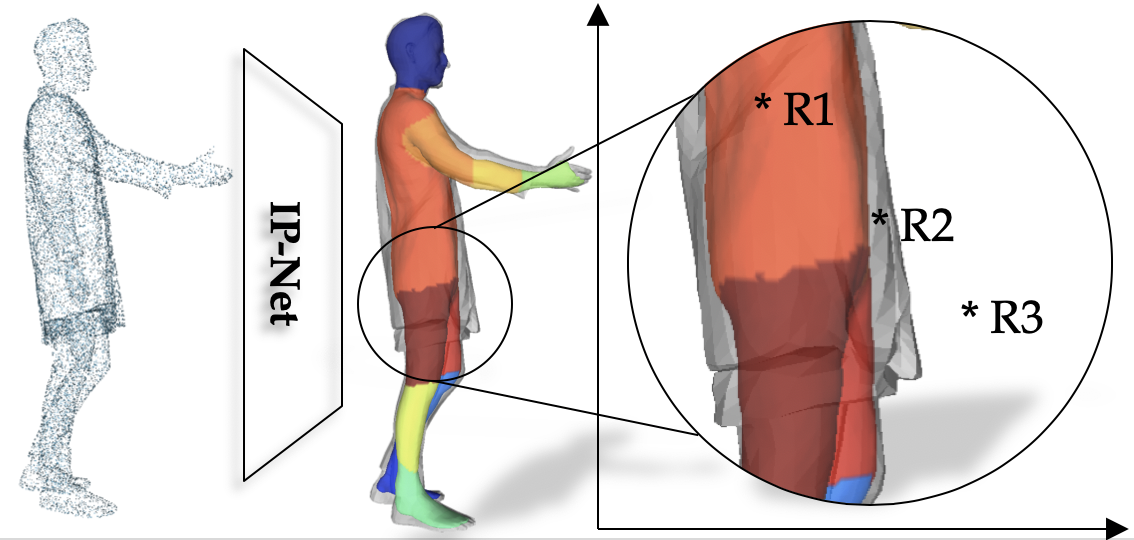

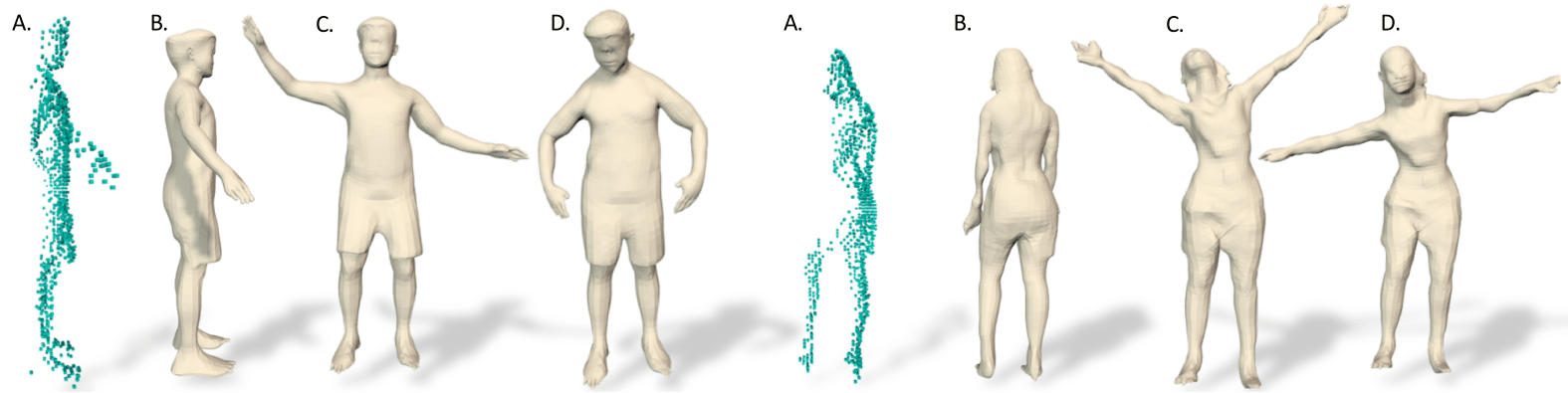

Overview: Given an input point cloud, IP-Net predicts a double-layered surface by classifying points in R^3 as lying i) inside the body, ii) between the body and the clothing, or iii) outside the clothing. This allows us to estimate not only the outer dressed surface but also the body shape under clothing. IP-Net also predicts SMPL part correspondences, which allow us to register SMPL+D to the implicit surface predicted by IP-Net, hence making our implicit reconstructions controllable.
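The three-way classification above already encodes both surfaces: the body surface separates class i) from the rest, and the clothing surface separates class iii) from the rest. A minimal sketch of that split follows, assuming a hypothetical integer label convention (0 = inside body, 1 = between body and clothing, 2 = outside clothing) and using concentric spheres as toy stand-ins for a body and its clothing; the names are illustrative, not taken from the IP-Net code.

```python
import numpy as np

# Hypothetical label convention for the three classes in the overview.
INSIDE_BODY, BETWEEN, OUTSIDE_CLOTHING = 0, 1, 2

def split_double_layer(labels):
    """Turn per-point 3-class labels into two binary occupancy fields."""
    labels = np.asarray(labels)
    inner_occ = labels == INSIDE_BODY        # occupied by the body
    outer_occ = labels != OUTSIDE_CLOTHING   # occupied by the dressed person
    return inner_occ, outer_occ

# Toy volume: a "body" sphere of radius 0.5 wrapped in "clothing" up to radius 0.7.
axis = np.linspace(-1.0, 1.0, 64)
x, y, z = np.meshgrid(axis, axis, axis, indexing="ij")
r = np.sqrt(x**2 + y**2 + z**2)
labels = np.where(r < 0.5, INSIDE_BODY,
                  np.where(r < 0.7, BETWEEN, OUTSIDE_CLOTHING))

inner_occ, outer_occ = split_double_layer(labels)
# The body lies entirely inside the clothing by construction of the labels.
assert not np.any(inner_occ & ~outer_occ)
```

Each occupancy grid could then be meshed separately, for example with `skimage.measure.marching_cubes(inner_occ.astype(float), level=0.5)`, to obtain an inner body mesh and an outer dressed mesh.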


Citation

@inproceedings{bhatnagar2020ipnet,
  title = {Combining Implicit Function Learning and Parametric Models for 3D Human Reconstruction},
  author = {Bhatnagar, Bharat Lal and Sminchisescu, Cristian and Theobalt, Christian and Pons-Moll, Gerard},
  booktitle = {European Conference on Computer Vision ({ECCV})},
  month = {August},
  organization = {{Springer}},
  year = {2020},
}